Hi! My name is Mingsheng Li (Chinese name: 李铭晟). I am currently a final-year Master’s student in Artificial Intelligence at Fudan University, advised by Prof. Tao Chen. I am also fortunate to work closely with Dr. Hongyuan Zhu from A*STAR, Singapore, Dr. Gang Yu, Dr. Xin Chen, and Dr. Chi Zhang from Tencent, and Dr. Bo Zhang from Shanghai AI Lab. Before this, I received my bachelor’s degree in Electronic Engineering from Fudan University in 2022.

I work in the fields of deep learning and computer vision, with a particular focus on large models, multi-modal learning, and embodied AI. My research aims to develop robust and scalable general-purpose AI systems that solve complex problems.

📣 I am actively looking for researcher / Ph.D. opportunities. Please check out my resume here.

🔥 News

- Sep. 2024. 🎉🎉 One paper (3DET-Mamba) is accepted to NeurIPS 2024! (Coming soon)

- Jul. 2024. 🎉🎉 M3DBench is accepted to ECCV 2024.

- Jun. 2024. 🚀🚀 We release WI3D, the first approach that can generalize well-trained 3D detectors to learn novel classes with the aid of foundation models.

- Jun. 2024. 🎉🎉 Our LGD, a new method for lightweight model pre-training, is accepted to T-MM 2024. Code is released now!

- Apr. 2024. 🎉🎉 Our state-of-the-art 3D dense captioning method, Vote2Cap-DETR++, is accepted to T-PAMI 2024.

- Jul. 2024. 🚀🚀 We release M3DBench, a new 3D instruction-following dataset with interleaved multi-modal prompts and a new benchmark to assess large models across 3D vision-centric tasks.

- Feb. 2024. 🎉🎉 Our Large Language 3D Assistant, LL3DA, is accepted to CVPR 2024.

📝 Recent Works

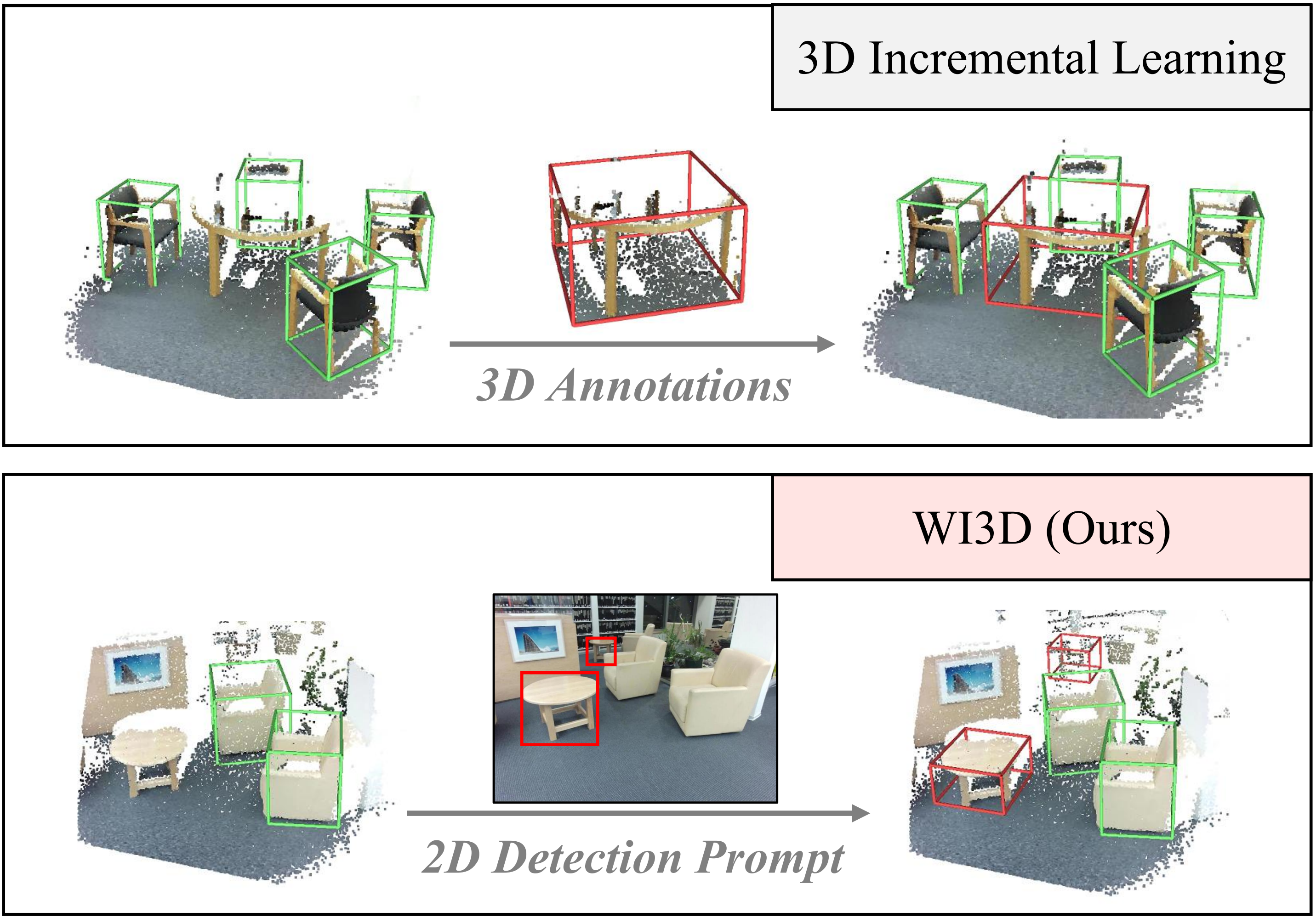

WI3D: Weakly Incremental 3D Detection via Vision Foundation Models

T-MM 2024

Mingsheng Li, Sijin Chen, Shengji Tang, Hongyuan Zhu, Yanyan Fang, Xin Chen, Zhuoyuan Li, Fukun Yin, Gang Yu, Tao Chen

- Introducing new categories to well-trained 3D detectors with 2D foundation models.

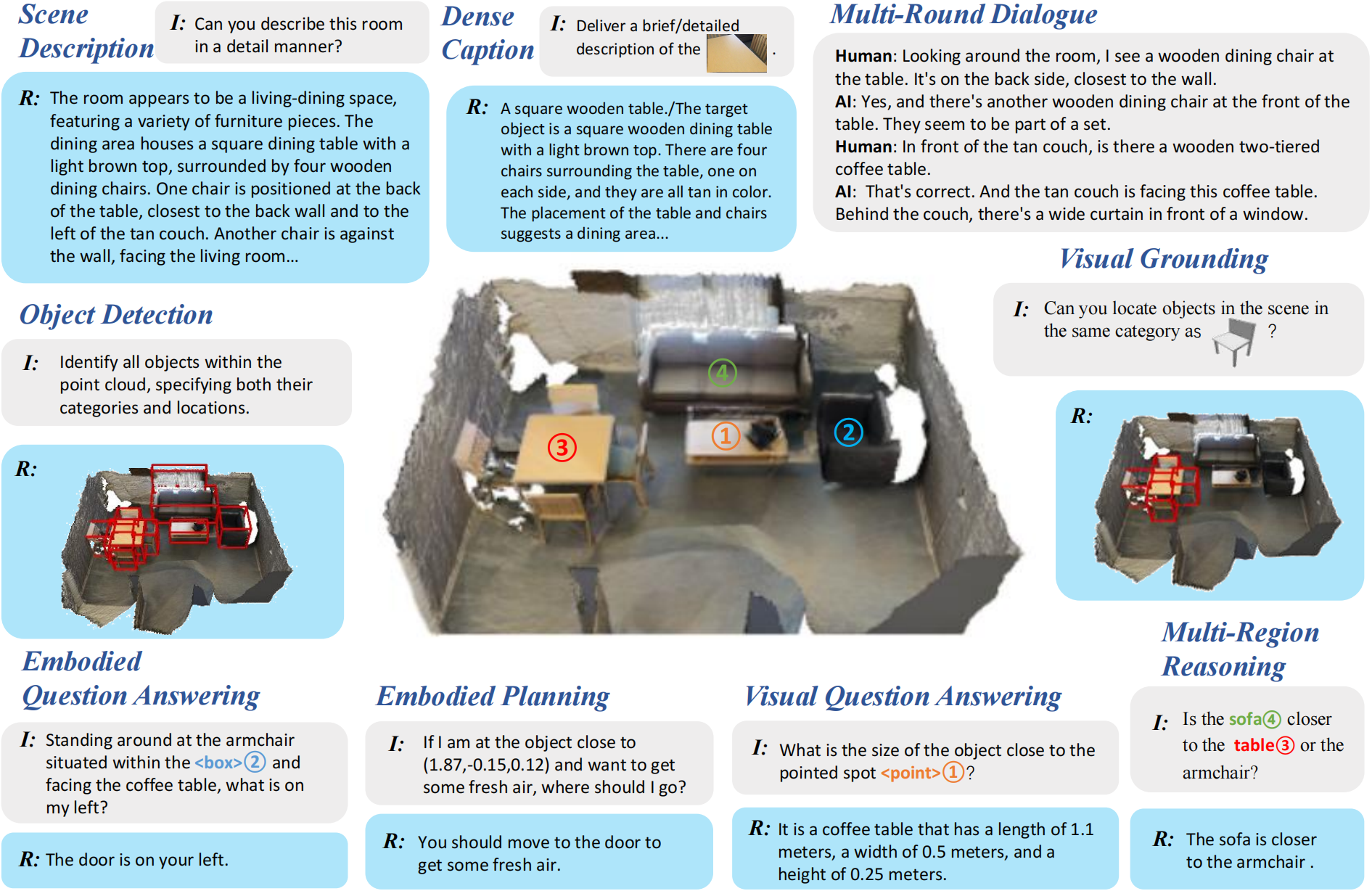

M3DBench: Let's Instruct Large Models with Multi-modal 3D Prompts

ECCV 2024

Mingsheng Li, Xin Chen, Chi Zhang, Sijin Chen, Hongyuan Zhu, Fukun Yin, Gang Yu, Tao Chen

- Propose a comprehensive 3D instruction-following dataset with support for interleaved multi-modal prompts.

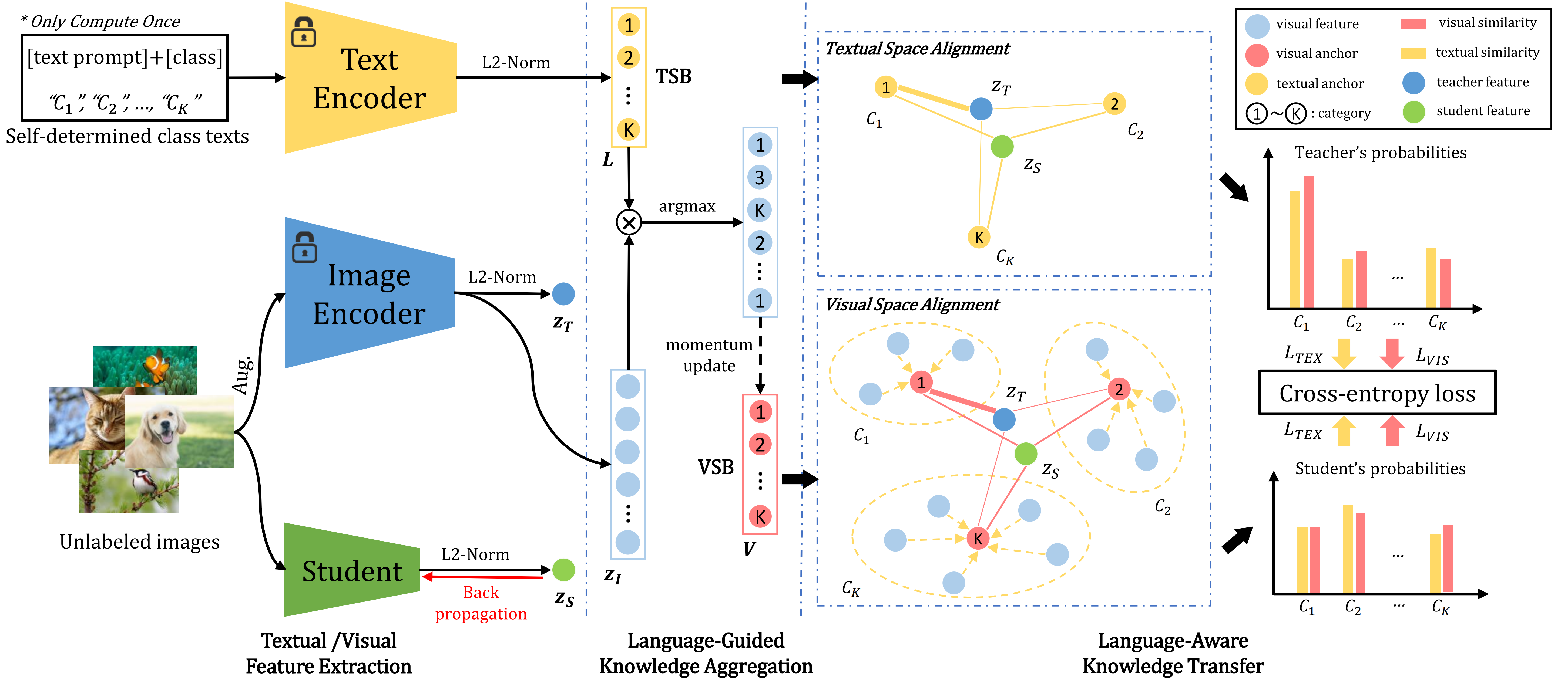

Lightweight Model Pre-training via Language Guided Knowledge Distillation

T-MM 2024

Mingsheng Li, Lin Zhang, Mingzhen Zhu, Zilong Huang, Gang Yu, Jiayuan Fan, Tao Chen

- Language-guided distillation enhances model pre-training.

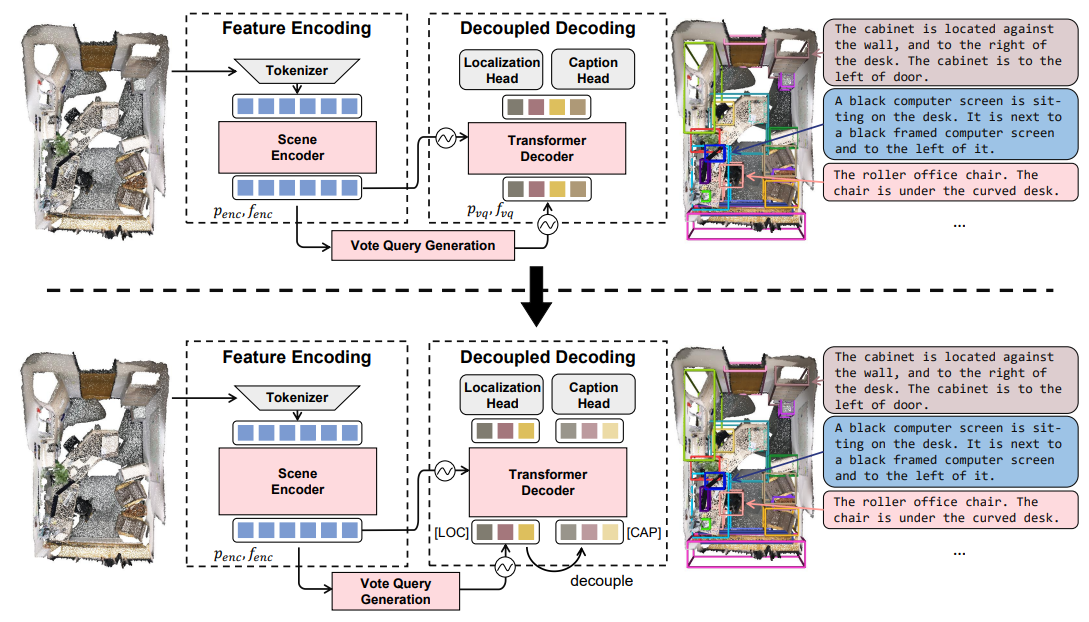

Vote2Cap-DETR++: Decoupling Localization and Describing for End-to-End 3D Dense Captioning

T-PAMI 2024

Sijin Chen, Hongyuan Zhu, Mingsheng Li, Xin Chen, Peng Guo, Yinjie Lei, Gang Yu, Taihao Li, Tao Chen

- Decoupled feature extraction and task decoding for 3D Dense Captioning.

LL3DA: Visual Interactive Instruction Tuning for Omni-3D Understanding, Reasoning, and Planning

CVPR 2024

Sijin Chen, Xin Chen, Chi Zhang, Mingsheng Li, Gang Yu, Hao Fei, Hongyuan Zhu, Jiayuan Fan, Tao Chen

project | arXiv | github | youtube

- Propose a Large Language 3D Assistant that responds to both visual interactions and textual instructions in complex 3D environments.

🥇 Awards and Scholarships

- 2024. National Scholarship (rank 1/244).

- 2023. 2nd Prize of Graduate Academic Scholarship.

- 2022. Outstanding Graduate of Fudan University.

- 2020. 2nd Prize of China Undergraduate Mathematical Contest in Modeling.

- 2020. STEM (Science, Technology, Engineering, Mathematics) Scholarship.

- 2019. 1st Prize of Chinese Mathematics Competitions (Top 20).

- 2019. National Encouragement Scholarship.

📖 Education

- Sep. 2022 - Jun. 2025 (expected). Master's student at Fudan University.

- Sep. 2018 - Jun. 2022. Bachelor's student at Fudan University.

💻 Internships

- 2024.01 - Present. Research Intern, Shanghai AI Lab, Shanghai, China.